Acknowledging Ignorance: A Virtue for Humans, A Necessity for Machines

The moment we acknowledge our ignorance, we have already taken a firm step out of the mire of unknowing. — Me :)

The ancient Greek philosopher Socrates opened a window onto the world with a classic and profound piece of self-reflection: "I know one thing, and that is that I know nothing." Like a ray of dawn light piercing the night of philosophy, this statement illuminates the boundary between knowledge and ignorance. It is not only a mark of Socrates' unparalleled humility but also a beacon for thinking about the starting points of knowledge, ignorance, and learning.

Honesty is the Starting Point for Improvement, and Acknowledging Ignorance a Manifestation of Wisdom

I have previously recommended the book "Mindset: The New Psychology of Success" by psychologist Carol S. Dweck. It explores in depth the vast differences between a growth mindset and a fixed mindset, and how each affects an individual's success and development. Professor Dweck's research reveals a simple yet profound truth: individuals with a growth mindset, who believe their abilities can be developed through effort and learning, tend to achieve more across many areas of life.

For those interested in the book, here's a brief lecture by the author. My former boss recently joined OpenAI to lead the growth team and help with ChatGPT's development.

Quantifying Ignorance through Uncertainty

Uncertainty is a concept that evokes mixed feelings: for explorers, it is both a challenge and an opportunity. It is not an obstacle but an invitation, encouraging us to examine the world's complexity with a scientific approach.

The advent of probability theory marked a revolutionary leap in how humans understand the world, comparable in importance to Newtonian mechanics or Einstein's theory of relativity. Its greatness lies not only in expanding our cognitive horizons but also in dismantling our binary view that things are either black or white. It tells us that reality is full of possibilities and that absolute certainty is actually quite rare. This notion encourages us to recognize our cognitive limits with humility and to explore the unknown with courage.

Through probability theory, we have learned to make wise predictions and decisions in the face of incomplete information. This principle is valuable not only for human decision-makers but also serves as the cornerstone for building efficient artificial intelligence systems.

How Artificial Intelligence Faces "Ignorance"

In the early development of artificial intelligence, "ignorance" was treated not as something to evade or neglect but as an opportunity for learning and growth. Examples include Bayes-optimal decision-making, which minimizes the misclassification rate, and the margin-maximization principle behind Support Vector Machines (SVMs).
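To make these two ideas concrete, here is a minimal Python sketch. The function names and the spam/ham numbers are hypothetical illustrations. The Bayes-optimal rule picks the class with the largest posterior probability, which minimizes the expected misclassification rate, and 1 minus that posterior is the error it still expects at that input: a quantified form of "I might be wrong here". The SVM helper only computes the margin width 2/||w|| that SVM training maximizes; it does not train an SVM.

```python
import math

def bayes_decide(priors, likelihoods):
    """Pick the class with the largest posterior P(c | x).

    priors[c] = P(c); likelihoods[c] = p(x | c) for the observed input x.
    Choosing the maximum-posterior class minimizes the probability of error.
    """
    # Unnormalized posteriors P(c) * p(x | c); dividing by their sum gives P(c | x).
    scores = {c: priors[c] * likelihoods[c] for c in priors}
    evidence = sum(scores.values())
    posteriors = {c: s / evidence for c, s in scores.items()}
    best = max(posteriors, key=posteriors.get)
    # 1 - max posterior is the error the rule still expects at this x:
    # a quantified statement of what the classifier does not know.
    return best, posteriors, 1.0 - posteriors[best]

def svm_margin_width(w):
    """Margin width 2 / ||w|| for a hyperplane w.x + b = 0 scaled so that the
    support vectors satisfy |w.x + b| = 1. SVM training maximizes this width."""
    return 2.0 / math.sqrt(sum(wi * wi for wi in w))

# Hypothetical numbers: 20% of messages are spam, and the observed word
# is 9x more likely under spam than under ham.
best, posteriors, expected_error = bayes_decide(
    {"spam": 0.2, "ham": 0.8}, {"spam": 0.9, "ham": 0.1}
)
# best == "spam" with posterior 0.18 / 0.26 (about 0.69), so about 31% expected error
```

Even the optimal decision here carries roughly a 31% chance of being wrong, and the rule reports that number rather than hiding it.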

Active Learning: Teach Me What I Don't Know!

When I worked on integrity at Meta/Facebook, we were up against cunning gray-market players who went to great lengths to bypass our detection mechanisms. This posed a significant challenge to our machine learning strategies. Faced with this obstacle, we turned to a strategy known as active learning. On the public internet, this approach may be better known as "human-in-the-loop" machine learning.

Active learning exemplifies this willingness to acknowledge ignorance. It is not just a technique or algorithm but a philosophy: an intelligent system actively identifies where its understanding of the data is uncertain, exposes those deficiencies, and seeks ways to fill its knowledge gaps. In this process, a machine learning model is both learner and explorer, assessing its own understanding of the data to identify which data points would most improve its performance.
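How does a model "identify its uncertainties"? A common answer is to score each unlabeled example from the model's predicted class probabilities. The sketch below shows three standard scores (least confidence, margin, and entropy); the example names and probabilities are hypothetical, and a real system would get the probabilities from its trained classifier.

```python
import math

def least_confidence(probs):
    # 1 - probability of the top class: high when even the best guess is weak.
    return 1.0 - max(probs)

def margin_uncertainty(probs):
    # A small gap between the top two classes means the model is torn;
    # negate it so that larger values mean "more uncertain".
    top1, top2 = sorted(probs, reverse=True)[:2]
    return -(top1 - top2)

def entropy(probs):
    # Shannon entropy in nats; largest when the prediction is uniform.
    return -sum(p * math.log(p) for p in probs if p > 0)

# Hypothetical class probabilities for three unlabeled posts.
predictions = {
    "post_a": [0.98, 0.01, 0.01],  # confident
    "post_b": [0.40, 0.35, 0.25],  # torn between classes
    "post_c": [0.70, 0.20, 0.10],
}
most_uncertain = max(predictions, key=lambda k: entropy(predictions[k]))
# All three scores agree here: post_b is the one worth sending to a human labeler.
```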

The logic behind this strategy is clear: in a large pool of data, only a few points contribute most to the model's learning. By focusing on these key points, active learning lets the model absorb the most critical information first, reaching its learning objectives more efficiently and with fewer resources, in particular fewer human labels. This self-directed approach not only accelerates learning but also improves the model's adaptability and accuracy on unseen data.
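The claim that "only a few points contribute most" can be seen in a deliberately tiny toy: a one-dimensional threshold classifier over a pool of 1,000 unlabeled points. The pool, the hidden threshold of 0.37, and the `oracle` labeler are all hypothetical. Because a threshold model implies every label left of a known negative and right of a known positive, only the points in between are uncertain, and querying the middle of that region halves it with each label. This is a sketch of pool-based active learning under those assumptions, not a production pipeline.

```python
import random

def make_pool(n, seed=0):
    # Unlabeled pool: n points in [0, 1), sorted so the labels are monotone.
    rng = random.Random(seed)
    return sorted(rng.random() for _ in range(n))

def oracle(x, threshold=0.37):
    # Stand-in for the human labeler (hypothetical): label 1 iff x >= threshold.
    return int(x >= threshold)

def active_learn(pool, label_fn):
    """Ask for labels only where the current model is still uncertain.

    Invariant: every point in pool[:lo] is known to be 0 and every point in
    pool[hi:] is known to be 1, so only pool[lo:hi] is uncertain. Querying
    the middle of that region halves it with each label.
    """
    lo, hi = 0, len(pool)
    queries = 0
    while lo < hi:
        mid = (lo + hi) // 2
        queries += 1
        if label_fn(pool[mid]) == 1:
            hi = mid          # everything from mid onward must be 1
        else:
            lo = mid + 1      # everything up to mid must be 0
    # lo is now the first positive index, so every label is determined.
    return [int(i >= lo) for i in range(len(pool))], queries

pool = make_pool(1000)
labels, queries = active_learn(pool, oracle)
# All 1,000 labels are recovered from at most 10 queries.
```

A passive learner that labels points in random order has no way to tell which labels are informative; here the learner's own uncertainty tells it exactly where to ask.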

Applying active learning strategies to artificial intelligence systems not only improves machine learning efficiency but also endows machines with a human-like ability to learn and adapt: After recognizing their limitations, they actively explore and learn, thereby achieving continuous improvement. This approach represents not just a technological leap but also a philosophical reflection on the field of machine learning. Actively revealing one's weaknesses, in the long run, is a strategy to promote self-improvement and the development of adaptability.

Multi-Arm Bandits: Facing Ignorance Head-On While Avoiding Its Consequences

When you're in Las Vegas facing a row of slot machines, each with unknown odds and payouts, your challenge is to maximize your returns. This is the classic Multi-Arm Bandit (MAB) problem, and it shows how hard it is to make optimal choices in an environment full of uncertainty.
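One standard way to make "maximize returns" precise is expected regret. In notation introduced here (not the original's), and assuming each machine k pays independent random rewards with unknown mean μ_k, let μ* = max_k μ_k be the best machine's mean, r_t the reward received at round t, and N_k(T) the number of times machine k is played in T rounds:

$$
R_T = T\,\mu^{*} - \mathbb{E}\left[\sum_{t=1}^{T} r_t\right] = \sum_{k=1}^{K} \Delta_k\,\mathbb{E}\left[N_k(T)\right], \qquad \Delta_k = \mu^{*} - \mu_k
$$

Maximizing total return is therefore the same as minimizing regret, and the right-hand side prices ignorance directly: every pull of a worse machine costs its gap Δ_k.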

In practice, Multi-Arm Bandit algorithms are widely used in scenarios requiring constant choice-making, such as online advertising, recommendation systems, and resource allocation. Their advantage lies in the ability to quickly adapt to changing environments based on real-time feedback, continuously optimizing strategies to achieve long-term goals. This method represents a highly flexible and efficient decision-making approach, enabling machines to remain competitive in complex and variable environments.

Multi-Arm Bandit algorithms tackle this challenge with various strategies, such as ε-greedy, Upper Confidence Bound (UCB), and Thompson sampling. These strategies differ in how they balance exploration (trying options to gather information) and exploitation (using known information to maximize returns), but they share a common goal: to reduce the cost of "ignorance" while still facing the unknown and daring to try despite potential failure.
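The three strategies named above can be sketched in a few lines each. This minimal simulation assumes Bernoulli (win/lose) slot machines with hypothetical payout rates; the UCB variant shown is UCB1, Thompson sampling uses a Beta(1, 1) prior per machine, and ε = 0.1 is an arbitrary choice. It is meant to show how each policy trades exploration against exploitation, not to be a tuned implementation.

```python
import math
import random

def epsilon_greedy(counts, values, t, rng, epsilon=0.1):
    # With probability epsilon explore a random arm; otherwise exploit the best estimate.
    if rng.random() < epsilon:
        return rng.randrange(len(counts))
    return max(range(len(counts)), key=lambda a: values[a])

def ucb1(counts, values, t, rng):
    # Try every arm once, then add an optimism bonus that shrinks as an arm is pulled more.
    for a, n in enumerate(counts):
        if n == 0:
            return a
    return max(range(len(counts)),
               key=lambda a: values[a] + math.sqrt(2 * math.log(t) / counts[a]))

def thompson(counts, values, t, rng):
    # Bernoulli rewards with a Beta(1, 1) prior: draw a plausible payout rate
    # for each arm from its posterior and play the arm with the largest draw.
    draws = []
    for n, mean in zip(counts, values):
        wins = round(mean * n)
        draws.append(rng.betavariate(1 + wins, 1 + n - wins))
    return max(range(len(counts)), key=lambda a: draws[a])

def run(policy, probs, horizon=5000, seed=0):
    # Simulate slot machines whose payout probabilities are hidden from the policy.
    rng = random.Random(seed)
    counts, values = [0] * len(probs), [0.0] * len(probs)
    total = 0
    for t in range(1, horizon + 1):
        a = policy(counts, values, t, rng)
        reward = 1 if rng.random() < probs[a] else 0
        counts[a] += 1
        values[a] += (reward - values[a]) / counts[a]  # running mean of observed rewards
        total += reward
    # Expected reward of always playing the best arm, minus what was actually won.
    regret = horizon * max(probs) - total
    return counts, regret

probs = [0.05, 0.10, 0.30]  # hypothetical payout rates, unknown to the policies
for name, policy in [("epsilon-greedy", epsilon_greedy), ("UCB1", ucb1), ("Thompson", thompson)]:
    counts, regret = run(policy, probs)
    print(name, counts, regret)
```

In runs like this, all three policies should end up pulling the 0.30 machine far more often than the others; they differ mainly in how much they spend on exploration along the way. ε-greedy keeps exploring at a fixed rate forever, while UCB1 and Thompson sampling explore less as their estimates firm up.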

The core of Multi-Arm Bandit algorithms lies in their dynamic adjustment of the decision-making process, finding a balance between the known and unknown. Like active learning, this strategy is based on the machine's "ignorance"—but focuses on minimizing the long-term impact of this ignorance with each decision. Through continuous experimentation and feedback, the algorithm learns which choices are most likely to lead to the best outcomes, thereby optimizing its decision-making process.

Reducing the Risks of Artificial Intelligence Through Acknowledging "Ignorance"

Despite the impressive "erudition" displayed by large language models (LLMs), their answers to unknown or uncertain questions are often speculative. In such situations, we, with our limited knowledge, struggle to discern whether a response is factual or mere fabrication. Enabling AI assistants to recognize their "ignorance," i.e., identifying their knowledge boundaries, can significantly reduce the risks associated with incorrect answers.

A recent paper titled "Can AI Assistants Know What They Don’t Know?" explores this concept by introducing the "I don't know" (Idk) dataset to train LLMs, enabling them to recognize and refuse to answer questions beyond their knowledge scope. This practice not only enhances the accuracy of model responses but also increases the reliability of AI in knowledge-intensive tasks.

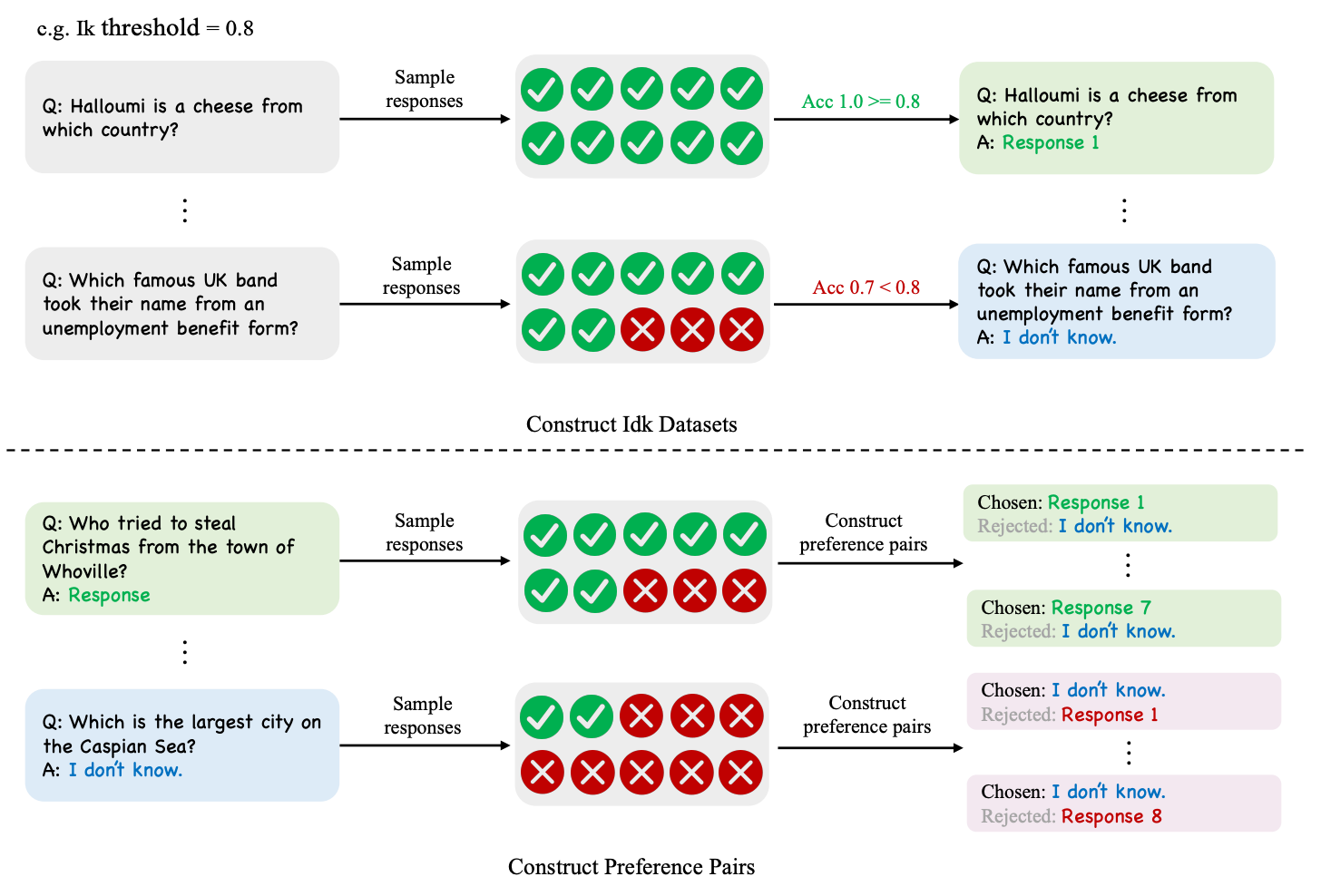

The paper begins by framing the problem in terms of cognitive quadrants, with the aim of making models aware of their knowledge boundaries. It then proposes an Idk dataset: by labeling each question according to whether the model's accuracy on it reaches a specific threshold, the researchers delineate the model's knowledge boundary. With this dataset, a model can be taught to express "ignorance" through three approaches: Idk prompting, supervised fine-tuning (SFT), and preference-aware optimization.
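The threshold-based labeling can be sketched as follows, assuming the model is sampled several times per question and its accuracy over those samples decides whether the question counts as "known". The hooks `sample_answers` and `is_correct`, the refusal text, the sample count, and the threshold value are all hypothetical stand-ins rather than the paper's exact setup.

```python
# Hypothetical refusal text; the paper's exact wording may differ.
IDK_RESPONSE = "I don't know the answer to this question."

def build_idk_dataset(qa_pairs, sample_answers, is_correct, threshold=1.0, n_samples=10):
    """Label each question as known or unknown from the model's own sampled answers.

    qa_pairs: list of (question, gold_answer).
    sample_answers(question, n): hypothetical hook returning n sampled model answers.
    is_correct(answer, gold): hypothetical hook that judges one answer.
    threshold: accuracy needed to count a question as "known" (an assumed value).
    """
    dataset = []
    for question, gold in qa_pairs:
        answers = sample_answers(question, n_samples)
        accuracy = sum(bool(is_correct(a, gold)) for a in answers) / len(answers)
        known = accuracy >= threshold
        dataset.append({
            "question": question,
            "accuracy": accuracy,
            "known": known,
            # Known questions keep their answer; unknown ones are taught to refuse.
            "target": gold if known else IDK_RESPONSE,
        })
    return dataset

# Toy stand-in for a model: always right about France, right 3 times in 10 about the Titanic.
def fake_sampler(question, n):
    if question == "What is the capital of France?":
        return ["Paris"] * n
    return ["1912"] * 3 + ["1915"] * (n - 3)

qa = [("What is the capital of France?", "Paris"), ("In what year did the Titanic sink?", "1912")]
data = build_idk_dataset(qa, fake_sampler, lambda answer, gold: answer == gold)
# data[0] is labeled known (target "Paris"); data[1] has accuracy 0.3 and target IDK_RESPONSE.
```

Note that the boundary is model-specific: the same question can be "known" for one model and "unknown" for another, which is why the labels come from the model's own samples.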

Idk Prompting Method: A specific instruction is prepended to each input question, so that a model capable of following human instructions answers "I don't know" to questions it cannot answer. This method is straightforward and requires no additional training, but it may be less effective for pretrained models that cannot follow instructions precisely.
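Idk prompting needs no training at all, only a template. The instruction wording below is a hypothetical example in the spirit of the method, not the paper's exact prompt, and the refusal check is a deliberately crude string match for illustration.

```python
# Hypothetical instruction text, modeled on the idea rather than copied from the paper.
IDK_INSTRUCTION = (
    "Answer the following question. If you don't know the answer, "
    "reply only with \"I don't know\"."
)

def idk_prompt(question):
    # Prepend the instruction; nothing is trained, so this relies on instruction following.
    return f"{IDK_INSTRUCTION}\n\nQuestion: {question}\nAnswer:"

def is_refusal(response):
    # Crude refusal check; normalize the curly apostrophe many models emit.
    return "i don't know" in response.replace("\u2019", "'").lower()

print(idk_prompt("Who wrote Hamlet?"))
```

The weakness noted above shows up directly here: the template only works if the model actually obeys the instruction, which a base pretrained model may not.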

Supervised Fine-tuning: The model is trained on the Idk dataset, in which questions beyond its knowledge are paired with "I don't know" responses, teaching it to recognize which questions exceed its knowledge scope. Using standard sequence-to-sequence training, this method improves how accurately the model judges whether it can answer a question, and answers it.
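Concretely, SFT on such a dataset reduces to building (input, target) pairs and computing the loss only on the target tokens. The sketch assumes records with "question" and "target" keys, a hypothetical prompt template, and hypothetical token ids; masking prompt positions with -100 is one common setup for decoder-only models, relying on PyTorch's `CrossEntropyLoss` default `ignore_index`.

```python
IGNORE_INDEX = -100  # PyTorch's CrossEntropyLoss ignores this label value by default

def to_sft_examples(dataset):
    """Turn labeled Idk records into (input, target) pairs.

    Each record is assumed to have "question" and "target" keys, where the target is
    either the answer (known question) or a refusal such as "I don't know" (unknown one).
    The prompt template is a hypothetical choice.
    """
    return [{"input": f"Question: {r['question']}\nAnswer:", "target": r["target"]}
            for r in dataset]

def build_labels(prompt_ids, target_ids):
    # Concatenate prompt and target tokens, but score only the target tokens,
    # so the model learns what to reply, including when to reply "I don't know".
    input_ids = prompt_ids + target_ids
    labels = [IGNORE_INDEX] * len(prompt_ids) + target_ids
    return input_ids, labels

examples = to_sft_examples([{"question": "Who wrote Hamlet?", "target": "William Shakespeare"}])
input_ids, labels = build_labels([101, 102, 103], [201, 202])  # hypothetical token ids
```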

Preference-aware Optimization: First, supervised fine-tuning is performed on half of the Idk dataset as a warm-up. The warmed-up model then generates multiple responses to each question in the remaining half, and preference data are constructed from these responses. Finally, the model is further fine-tuned on this preference data, so that it makes more reasonable choices when faced with uncertainty.
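The data side of this pipeline might look like the sketch below. The half-and-half split follows the description above; the preference rule (for a known question, the correct answer beats a refusal; for an unknown question, a refusal beats each wrong answer) and all field names are assumptions for illustration. Triples in this (prompt, chosen, rejected) shape are the kind of input that DPO-style preference optimization consumes; the sketch stops before any actual training.

```python
import random

# Hypothetical refusal text used as the "I don't know" response.
IDK_RESPONSE = "I don't know the answer to this question."

def split_for_warmup(dataset, seed=0):
    # Shuffle, then use one half for the SFT warm-up and the other half for preference data.
    rows = list(dataset)
    random.Random(seed).shuffle(rows)
    mid = len(rows) // 2
    return rows[:mid], rows[mid:]

def build_preference_pairs(records):
    """Build (prompt, chosen, rejected) triples from the warmed-up model's responses.

    Each record is assumed to hold "question", "gold", "known" (from the Idk labeling),
    and "responses" sampled from the warmed-up model. Assumed preference rule:
    for a known question, the correct answer beats a refusal; for an unknown
    question, a refusal beats each distinct wrong answer the model produced.
    """
    pairs = []
    for r in records:
        if r["known"]:
            pairs.append({"prompt": r["question"], "chosen": r["gold"], "rejected": IDK_RESPONSE})
            continue
        wrong = [a for a in dict.fromkeys(r["responses"]) if a not in (r["gold"], IDK_RESPONSE)]
        for answer in wrong:
            pairs.append({"prompt": r["question"], "chosen": IDK_RESPONSE, "rejected": answer})
    return pairs

records = [
    {"question": "What is the capital of France?", "gold": "Paris", "known": True,
     "responses": ["Paris", "Paris"]},
    {"question": "In what year did the Titanic sink?", "gold": "1912", "known": False,
     "responses": ["1915", "1915", IDK_RESPONSE, "1920"]},
]
pairs = build_preference_pairs(records)
# One pair for the known question, plus one for each distinct wrong answer ("1915", "1920").
```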

The common goal of these methods is to enhance AI's self-awareness, ensuring it opts not to respond when uncertain, thereby improving its overall reliability and accuracy.
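Why does declining to answer improve reliability? A simple accounting makes the trade-off visible. This is generic selective-prediction bookkeeping, not the paper's evaluation metrics, and the confidence scores are hypothetical: a model that abstains below a confidence threshold answers fewer questions, but a larger share of its answers are right and fewer wrong answers reach the user.

```python
def selective_metrics(predictions, threshold):
    """Score a model that abstains whenever its confidence is below `threshold`.

    predictions: list of (confidence, is_correct) pairs, one per question.
    Returns (coverage, accuracy on answered questions, wrong answers per question asked).
    """
    answered = [correct for confidence, correct in predictions if confidence >= threshold]
    coverage = len(answered) / len(predictions)
    answered_accuracy = sum(answered) / len(answered) if answered else float("nan")
    wrong_rate = (len(answered) - sum(answered)) / len(predictions)
    return coverage, answered_accuracy, wrong_rate

# Hypothetical confidence scores and correctness for seven questions.
preds = [(0.95, True), (0.90, True), (0.80, True), (0.60, False),
         (0.55, True), (0.30, False), (0.20, False)]
for threshold in (0.0, 0.5, 0.7):
    print(threshold, selective_metrics(preds, threshold))
```

On this toy data, raising the threshold from 0 to 0.7 cuts the wrong-answer rate from 3/7 to 0, at the cost of coverage falling from 7/7 to 3/7. Where to set the threshold is a judgment about how costly a confident wrong answer is compared with an honest "I don't know".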

In Conclusion

Just as we strive to make machines aware of their knowledge limitations, we should bravely face and accept our own shortcomings. This self-reflection is crucial not only for personal growth but also as a foundation for societal progress. If we neglect it, we may find that our own awareness and understanding fall short of those of the machines designed to emulate us. As we pursue technological innovation, then, let's not forget introspection and self-improvement, so that we keep our lead in wisdom and consciousness in the world to come.