Enhanced Robotic Control with Agent, RAG, and Function Calling: A Potential Cheap Solution for Highly Restricted Use Cases?

The dazzling accomplishments of artificial intelligence in the digital realm are well established, and the world's leading tech giants are now turning their attention to robotics in the physical world. Google's PaLM-SayCan project and Tesla's Optimus robot are prominent examples of this trend.

Last year, I developed a demo program that integrated an intelligent agent with a robotic arm (for more details, see "When AI Enters the Physical World: Operating a Robotic Arm with Your Voice, Not Code") and shared it on Bilibili, a Chinese video platform often compared to YouTube. The demo was far more popular than I expected: it quickly garnered tens of thousands of views and prompted a flood of private messages from viewers asking for the source code.

At the time, I felt the program was too rudimentary to release, and its code had been hastily written inside a repository unrelated to the OpenAI Assistant, so I held off on open-sourcing it.

Encouraged by the community's enthusiasm, however, I set aside some time to create a new repository, MNLM (https://github.com/small-thinking/mnlm), dedicated to organizing and sharing the relevant code. The repository not only tidies up the code from the original demo but also adds new features, such as simulation and RAG-based retrieval of instruction sets.

Below is a demonstration of the new agent-based robotic arm control using RAG + Function Calling. Unlike the previous attempt, this time I used a simulated robotic arm for the demonstration; the same algorithm can be applied to the physical arm as well.

For the simulation, I built a virtual robotic arm in Gazebo, a ROS2-compatible simulation environment. This lets us practice operating the arm without needing a physical one. Aesthetically, the virtual arm may not please everyone, but I invite you to appreciate its abstract beauty.

The RAG component is an initial exploration of combining agents with robotics. My earlier experiments showed that even OpenAI's GPT struggled with domain-specific APIs, such as those of a particular robotic arm model, and with specialized requirements such as generating sequences of API calls from natural language. The generated code often fell short of the required standard, and the model had difficulty with tasks of even moderate complexity.

MNLM: The Modern Wooden Ox?

MNLM is the pinyin acronym of 木牛流马 (Mu Niu Liu Ma, "Wooden Ox and Flowing Horse"), mechanical inventions attributed to Zhuge Liang (诸葛亮) or his team during the Three Kingdoms era of ancient China and designed to transport food supplies automatically. Crafted from wood and modeled after oxen, these devices could carry heavy loads down slopes with little effort, hence their name. They symbolize not only technological advancement but also an enduring spirit of innovation.

I chose the name MNLM rather than 木牛流马 itself because I believe such a significant title should be reserved for a project that truly merits it.

Overall Architecture

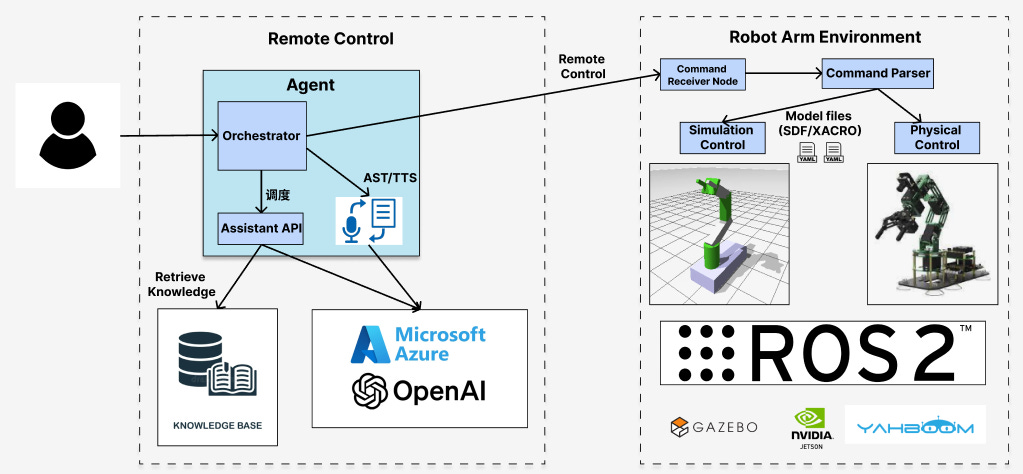

For those interested in the technical details, let's continue. The diagram above outlines the system's overall structure, which I've divided into two main components: the remote control station and the robotic arm.

This architecture was dictated by the limited performance of my current robotic arm, whose core component is an Nvidia Jetson Nano with just 2GB of VRAM, the most cost-effective GPU-enabled hardware I could find. Running complex Agent programs directly on such modest hardware is impractical, so I've limited the robotic arm to basic physical control and delegated the logic processing to a remote station.

This division of labor is not unique to my project; Google adopted a similar strategy in its PaLM-SayCan project. I had the privilege of attending a lecture by the creators of PaLM-SayCan, who mentioned that their robot's hardware could not handle all of the logic processing on board. Consequently, they also chose to host the large models in the cloud, with the robot running a smaller model to carry out tasks.
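The article does not specify how the laptop and the Jetson Nano exchange commands. As a minimal sketch of the split, assuming a plain socket link and a hypothetical length-prefixed JSON message format (neither is taken from MNLM), the station could serialize each command and the arm could read it back like this:

```python
import json
import socket
import struct

# Hypothetical wire format (an assumption, not MNLM's actual protocol):
# a 4-byte big-endian length header followed by a UTF-8 JSON body.
HEADER = struct.Struct("!I")


def send_command(sock: socket.socket, command: dict) -> None:
    """Serialize a command dict and send it with a length prefix."""
    body = json.dumps(command).encode("utf-8")
    sock.sendall(HEADER.pack(len(body)) + body)


def _recv_exact(sock: socket.socket, n: int) -> bytes:
    """Read exactly n bytes, because recv() may return fewer than requested."""
    chunks = []
    while n > 0:
        chunk = sock.recv(n)
        if not chunk:
            raise ConnectionError("socket closed mid-message")
        chunks.append(chunk)
        n -= len(chunk)
    return b"".join(chunks)


def recv_command(sock: socket.socket) -> dict:
    """Read one length-prefixed JSON command from the socket."""
    (length,) = HEADER.unpack(_recv_exact(sock, HEADER.size))
    return json.loads(_recv_exact(sock, length).decode("utf-8"))


if __name__ == "__main__":
    # A connected socket pair stands in for the laptop <-> Jetson Nano link.
    station, arm = socket.socketpair()
    send_command(station, {"action": "move_joint", "joint": "base", "angle": 30})
    print(recv_command(arm))
    station.close()
    arm.close()
```

The length prefix matters because a stream socket does not preserve message boundaries; without it, two quickly sent commands could arrive glued together.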

Remote Control: Agent with RAG + Function Calling

The remote control station is essentially my laptop. Currently, its voice interface relies on OpenAI's audio services, with Whisper handling speech recognition. Alternatively, we could use the local solution I discussed in the article "Let's Create a MOSS from The Wandering Earth".

In this update, I've added a local knowledge base for the robotic arm and used RAG to improve its ability to execute complex tasks. Although this component is still at an early stage, it can already perform some basic tasks.
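To make the retrieval step concrete, here is a minimal sketch of looking up arm instructions in a local knowledge base. The entries are hypothetical, and bag-of-words cosine similarity is used as a dependency-free stand-in for the embedding model a real RAG pipeline would use; this is not MNLM's implementation.

```python
import math
import re
from collections import Counter

# Hypothetical knowledge-base entries: each pairs a natural-language
# description with a pre-verified command sequence for the arm.
KNOWLEDGE_BASE = [
    {"description": "wave hello by swinging the arm left and right",
     "commands": ["rotate_base 30", "rotate_base -30", "rotate_base 0"]},
    {"description": "pick up an object and lift it",
     "commands": ["open_gripper", "lower_arm", "close_gripper", "raise_arm"]},
    {"description": "return the arm to its home position",
     "commands": ["reset"]},
]


def tokenize(text: str) -> Counter:
    """Lower-case bag-of-words counts; a stand-in for a real embedding."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, k: int = 1) -> list:
    """Return the k knowledge-base entries most similar to the query."""
    q = tokenize(query)
    ranked = sorted(KNOWLEDGE_BASE,
                    key=lambda entry: cosine(q, tokenize(entry["description"])),
                    reverse=True)
    return ranked[:k]
```

For example, `retrieve("please pick up the cup")` ranks the pick-up entry first, so the Agent works from its vetted command sequence instead of generating arm code from scratch.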

In the future, we could embed multiple elementary logic units in the RAG knowledge base, each accomplishing one fundamental action and customizable through input parameters. By invoking these units repeatedly, the Agent can assemble a complete command sequence, which not only makes command generation more reliable but also overcomes a key limitation of traditional RAG: it can only return fixed, pre-written content.
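The idea of parameterized elementary units can be sketched as follows. The unit names (`move_to`, `grip`, `release`) and the textual command format are hypothetical; the point is that the Agent only chooses units and their parameters, while each unit expands into a known-good command sequence.

```python
from typing import Callable, Dict, List


# Hypothetical elementary logic units. Each accomplishes one fundamental
# action and expands into low-level command strings based on its parameters.
def move_to(x: float, y: float, z: float) -> List[str]:
    return [f"move_to {x} {y} {z}"]


def grip(force: float = 0.5) -> List[str]:
    return [f"close_gripper {force}"]


def release() -> List[str]:
    return ["open_gripper"]


UNITS: Dict[str, Callable[..., List[str]]] = {
    "move_to": move_to,
    "grip": grip,
    "release": release,
}


def compile_plan(steps: List[dict]) -> List[str]:
    """Expand an Agent-produced plan of {unit, params} steps into one command list."""
    commands: List[str] = []
    for step in steps:
        name = step["unit"]
        if name not in UNITS:
            raise ValueError(f"unknown logic unit: {name}")
        commands.extend(UNITS[name](**step.get("params", {})))
    return commands


if __name__ == "__main__":
    # A pick-and-place task expressed as four parameterized units.
    plan = [
        {"unit": "move_to", "params": {"x": 0.2, "y": 0.0, "z": 0.05}},
        {"unit": "grip", "params": {"force": 0.8}},
        {"unit": "move_to", "params": {"x": 0.0, "y": 0.2, "z": 0.05}},
        {"unit": "release"},
    ]
    print(compile_plan(plan))
```

Rejecting unknown unit names up front keeps a hallucinated step from ever being turned into a command.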

For instance, when dealing with a complex task, a Large Language Model (LLM) would first decompose it into sub-tasks small enough that each corresponds to an entry stored in the knowledge base. The LLM then retrieves the template for each sub-task, fills in the necessary parameters, and sends the result to the robotic arm. This process effectively combines Retrieval-Augmented Generation (RAG) with function calling.
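On the function-calling side, a sub-task template can be exposed to the model as a tool definition in the JSON-schema format that OpenAI function calling uses, and the model's reply can be validated before anything reaches the arm. The `move_joint` tool, its joint names, and the output command shape below are illustrative assumptions, not MNLM's actual API.

```python
import json

# A tool definition in the JSON-schema format used by OpenAI function calling.
# The function name, joints, and parameters are hypothetical.
MOVE_JOINT_TOOL = {
    "type": "function",
    "function": {
        "name": "move_joint",
        "description": "Rotate one joint of the robotic arm to an absolute angle.",
        "parameters": {
            "type": "object",
            "properties": {
                "joint": {"type": "string",
                          "enum": ["base", "shoulder", "elbow", "wrist"]},
                "angle": {"type": "number", "description": "Target angle in degrees."},
            },
            "required": ["joint", "angle"],
        },
    },
}


def tool_call_to_command(name: str, arguments: str) -> dict:
    """Turn a model-produced tool call (name + JSON arguments) into a checked command."""
    spec = MOVE_JOINT_TOOL["function"]
    if name != spec["name"]:
        raise ValueError(f"unknown tool: {name}")
    args = json.loads(arguments)
    missing = [p for p in spec["parameters"]["required"] if p not in args]
    if missing:
        raise ValueError(f"missing arguments: {missing}")
    allowed_joints = spec["parameters"]["properties"]["joint"]["enum"]
    if args["joint"] not in allowed_joints:
        raise ValueError(f"unknown joint: {args['joint']}")
    return {"action": name, "joint": args["joint"], "angle": float(args["angle"])}
```

In a live system, the tool definition would be passed through the `tools` parameter of the Chat Completions or Assistants API, and each returned tool call's `function.name` and `function.arguments` (a JSON string) would be handed to `tool_call_to_command`. Validating on the laptop means a malformed call fails there rather than on the hardware.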

Robotic Arm End

At the robotic arm end, most of the logic is handled remotely through the Assistant API, so the arm's role is primarily to execute commands. It's crucial to decouple the various logic modules properly so that each can be iterated on and upgraded independently. To make testing more efficient, I've implemented a command parsing and dispatch mechanism that lets the same commands drive both the simulation environment and the actual robotic arm hardware, keeping behavior consistent across the two.
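A parsing and dispatch layer of this kind can be sketched with a small backend interface: the dispatcher parses each command once and hands it to whichever backend is configured, so swapping simulation for hardware is a one-line change. The `action key=value` text format and both backend classes are hypothetical placeholders, not MNLM's code.

```python
from typing import Dict, List, Protocol, Tuple


class ArmBackend(Protocol):
    """Anything that can execute a parsed command; sim and hardware share it."""

    def execute(self, action: str, args: Dict[str, float]) -> str: ...


class SimBackend:
    """Hypothetical stand-in for a Gazebo bridge; it only records commands."""

    def __init__(self) -> None:
        self.log: List[Tuple[str, Dict[str, float]]] = []

    def execute(self, action: str, args: Dict[str, float]) -> str:
        self.log.append((action, args))
        return "ok"


class HardwareBackend:
    """Hypothetical placeholder for the servo driver on the Jetson Nano."""

    def execute(self, action: str, args: Dict[str, float]) -> str:
        raise NotImplementedError("connect the real servo driver here")


def parse(line: str) -> Tuple[str, Dict[str, float]]:
    """Parse 'action key=value ...' into (action, {key: float(value)})."""
    action, *pairs = line.split()
    args: Dict[str, float] = {}
    for pair in pairs:
        key, value = pair.split("=", 1)
        args[key] = float(value)
    return action, args


class Dispatcher:
    """Routes parsed commands to whichever backend it was constructed with."""

    def __init__(self, backend: ArmBackend) -> None:
        self.backend = backend

    def run(self, lines: List[str]) -> List[str]:
        return [self.backend.execute(*parse(line)) for line in lines]
```

Usage: `Dispatcher(SimBackend()).run(["move_joint joint_id=0 angle=30", "open_gripper"])` exercises the full pipeline in simulation; replacing `SimBackend()` with `HardwareBackend()` sends the identical command list to the real arm once the driver is filled in.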

Insights: The Art and Challenge of Technological Integration

The workload of this project far exceeded my initial estimates. My first demo was completed over a long weekend, whereas this project stretched across a month of fragmented spare time. The main reason, I believe, is the broad range of knowledge required to get a robotics project running smoothly. This project touched on software architecture, machine learning, modeling, simulation, and robotics. I only scratched the surface of each, yet integrating these diverse technologies was a significant challenge in itself, not to mention the deeper layers of hardware design. Here I must express my admiration for the 'full-stack' ability of people like Zhihui Jun, who work all the way from circuit design to software development.

Architecture is demanding work. Specialists in each field may feel that an architect's knowledge of their specialty is superficial. However, an architect's role is to act as the 'glue' between different fields rather than to be an expert in a single domain.

Areas for Improvement

There is room for improvement in every aspect of the project. Take the RAG component mentioned earlier: the current approach is too rigid, requiring the robotic arm to follow a strict, fixed set of commands. If the retrieved content were parameterizable and composable, the Agent would gain much more freedom and flexibility. Naturally, this requires the Agent to have stronger reasoning capabilities so that it can decompose complex tasks more thoroughly.

Currently, the robotic arm end only executes commands and has no feedback mechanism. In practice, the robot may encounter situations that require interaction and correction, which calls for continuous communication between the control end and the execution end. How to let the robot carry out tasks adaptively with minimal communication is a key issue for us to address.
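One way to add feedback while keeping communication low is to have the arm report a status after each step and contact the control end only when a step fails. The sketch below assumes hypothetical `execute` and `correct` callables; in a real system, `correct` might ask the LLM on the remote station for a revised step based on the observation.

```python
from typing import Callable, List, Tuple

# Hypothetical feedback interface: after each step the arm reports
# (success, observation) instead of executing silently.
Executor = Callable[[str], Tuple[bool, str]]
# Given a failed step and its observation, return a revised step.
Corrector = Callable[[str, str], str]


def run_with_feedback(steps: List[str], execute: Executor, correct: Corrector,
                      max_retries: int = 2) -> List[str]:
    """Execute steps in order, asking for a corrected step whenever one fails.

    The corrector (which could live on the remote station) is contacted only
    after a failure, so a plan that goes smoothly needs no extra round trips.
    """
    history: List[str] = []
    for step in steps:
        attempt = step
        for tries in range(max_retries + 1):
            ok, observation = execute(attempt)
            history.append(f"{attempt} -> {'ok' if ok else observation}")
            if ok:
                break
            if tries < max_retries:
                attempt = correct(attempt, observation)
        else:
            # The inner loop never hit `break`: every attempt failed.
            raise RuntimeError(f"step failed after {max_retries} retries: {step}")
    return history
```

With a fake executor that reports "object slipped" for a weak grasp and a corrector that appends a higher force, the run recovers in one round trip instead of aborting the whole task.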

The current system also lacks vision. With visual input, the robot's adaptability should improve significantly. Running a YOLO model should be feasible even with just 2GB of VRAM, and the recent release of YOLOv9 gives us an opportunity to explore this area. YOLOv9 introduces Programmable Gradient Information (PGI) and the Generalized Efficient Layer Aggregation Network (GELAN): PGI aims to preserve the input information needed to compute reliable gradient updates, and GELAN is a lightweight architecture designed around gradient path planning.


RAG seems adequate for basic scenarios at this stage, but its effectiveness is limited as long as the robotic arm lacks feedback and adaptive capabilities. Once scenarios become even slightly more complex, a robotic arm with more advanced "intelligence" is clearly needed. This will be a focus of future work, with the aim of evolving the robotic arm from a mere executor of commands into an intelligent partner capable of perception, thought, and autonomous decision-making.

Looking Forward

Following Google and Tesla, Nvidia has also announced its entry into intelligent robotics. Recently, Jim Fan announced that he would co-lead Nvidia's new GEAR team, which focuses on embodied intelligence. If artificial intelligence is to change everyone's life more directly, it must enter the physical world, a trend we have all come to recognize.