"The best way to predict the future is to create it." -- Peter Drucker

How to Sort Numbers?

Suppose we have 100 numbers. How should we sort them in ascending order? Those with a computer science background might think of various solutions like bubble sort, merge sort, quicksort, etc. But have you heard of a simpler, albeit absurd, method? Enter BogoSort.

BogoSort works in a simple yet ridiculous way: it generates a random permutation of the numbers and then checks, starting from the first number, whether each subsequent number is at least as large as the one before it. If not, it generates another random permutation, repeating the process until the numbers happen to be sorted. This method is not only extremely inefficient (for n distinct numbers it needs about n! shuffles on average) but also directionless, much like monkeys randomly hitting keys on a typewriter, hoping to eventually produce a Shakespearean play.
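To make the procedure concrete, here is a minimal sketch of BogoSort in Python. The function names and the optional `rng` parameter are choices made for this illustration, and the input is kept tiny on purpose, since the running time explodes with the length of the list.

```python
import random


def is_sorted(values):
    """Return True if every element is <= the element that follows it."""
    return all(a <= b for a, b in zip(values, values[1:]))


def bogosort(values, rng=None):
    """Shuffle until sorted; return the sorted list and the number of shuffles."""
    if rng is None:
        rng = random.Random()
    items = list(values)  # work on a copy so the caller's list is untouched
    shuffles = 0
    while not is_sorted(items):
        rng.shuffle(items)  # no memory of past attempts, no sense of direction
        shuffles += 1
    return items, shuffles


result, attempts = bogosort([3, 1, 4, 1, 5], rng=random.Random(0))
print(result, "after", attempts, "shuffles")
```

Nothing learned from a failed shuffle informs the next one, which is exactly the property the rest of this post is concerned with.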

The Maze of Optimization: Challenges in AI Prompt Engineering

Today, the optimization of prompts for large language models (LLMs) seems to be at a stage akin to BogoSort. Most of the time, we design a few different prompts, evaluate them, and select the one that gives the best result. This heuristic method is not only inefficient but also largely a matter of luck, so there is no guarantee of ever reaching the optimal prompt. Optimization and evaluation work independently here: the evaluation result tells us which prompt won, but not how to write the next one. As tasks and pipelines grow more complex, this hit-or-miss approach will only become harder to sustain.

The Initial Formation of an Optimization Loop

As technology advances, we are starting to see hope for changing this situation. The Natural Language Processing group at Stanford University introduced a framework called DSPy (Paper: "DSPy: Compiling Declarative Language Model Calls into Self-Improving Pipelines", details at https://arxiv.org/pdf/2310.03714). Instead of hand-written prompt strings, this framework offers a declarative programming model embedded in Python, in which language model calls are declared as modules and then tuned automatically, making prompt optimization more systematic.

At first glance, the style of this framework resembles PyTorch. Users can develop with it as if they were writing regular Python code. For example, a chain of thought can be written like this:
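In DSPy itself, a chain-of-thought module is declared with a call of the form `dspy.ChainOfThought("question -> answer")`. Running that requires the library and a configured model, so the sketch below only imitates the idea with a toy class. `ToyChainOfThought`, `fake_lm`, and the convention that the last line of the completion is the answer are all assumptions made for illustration, not DSPy's implementation.

```python
class ToyChainOfThought:
    """Toy stand-in for a declarative chain-of-thought module (not DSPy's code)."""

    def __init__(self, signature, lm):
        # A signature such as "question -> answer" names the inputs and the output.
        inputs, output = signature.split("->")
        self.inputs = [name.strip() for name in inputs.split(",")]
        self.output = output.strip()
        self.lm = lm  # any callable mapping a prompt string to a completion string

    def build_prompt(self, **kwargs):
        """Render the inputs and ask the model to reason before answering."""
        lines = [f"{name.capitalize()}: {kwargs[name]}" for name in self.inputs]
        lines.append("Reasoning: Let's think step by step.")
        return "\n".join(lines)

    def __call__(self, **kwargs):
        completion = self.lm(self.build_prompt(**kwargs))
        # Assumed convention: the final line of the completion is the answer.
        rationale, _, answer = completion.rpartition("\n")
        return {"rationale": rationale.strip(), self.output: answer.strip()}


def fake_lm(prompt):
    """Hypothetical model stub so the example runs offline."""
    return "2 + 2 is two pairs, which makes four.\n4"


qa = ToyChainOfThought("question -> answer", lm=fake_lm)
prediction = qa(question="What is 2 + 2?")
print(prediction["answer"])
```

The point of the declarative style is that the prompt text lives inside the module rather than in user code, which is what leaves room for a framework to rewrite it automatically.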

Systems based on this framework can automate few-shot prompting: the demonstrations placed in a prompt are selected automatically instead of being written by hand. With prompts optimized automatically, prompt engineering no longer feels as directionless as BogoSort. Although DSPy's optimization still falls within the realm of discrete optimization or grid search, it achieves a more efficient and systematic optimization through the formation of self-improving pipelines.
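The flavor of this discrete search can be sketched in a few lines. The example below is not DSPy's optimizer: it exhaustively scores every subset of candidate demonstrations against a small development set, and `toy_lm` is a hypothetical stub that can only handle an arithmetic operator it has seen in a demonstration.

```python
from itertools import combinations


def build_prompt(demos, question):
    """Render the demonstrations followed by the new question."""
    shots = "".join(f"Q: {q}\nA: {a}\n" for q, a in demos)
    return f"{shots}Q: {question}\nA:"


def toy_lm(prompt):
    """Hypothetical model stub: it only handles an operator shown in a demonstration."""
    *shots, question = prompt.split("Q: ")
    left, op, right = question.split("\n")[0].split()
    if not any(f" {op} " in shot for shot in shots):
        return "?"
    return str(int(left) + int(right) if op == "+" else int(left) - int(right))


def accuracy(demos, devset):
    """Exact-match accuracy of the prompt built from these demonstrations."""
    hits = sum(toy_lm(build_prompt(demos, q)) == gold for q, gold in devset)
    return hits / len(devset)


def search_demos(candidates, devset, k):
    """Score every k-subset of the candidates and return the best one."""
    return max(combinations(candidates, k), key=lambda demos: accuracy(demos, devset))


candidates = [
    ("2 + 3", "5"),
    ("What is the capital of France?", "Paris"),
    ("7 - 4", "3"),
]
devset = [("1 + 1", "2"), ("9 - 5", "4")]

best = search_demos(candidates, devset, k=2)
print(best, accuracy(best, devset))
```

The metric now drives the choice of prompt, which closes the loop between evaluation and optimization. The search is still blind enumeration, though: a low score says that a subset is bad, not how to fix it.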

Differential Optimization for Prompts

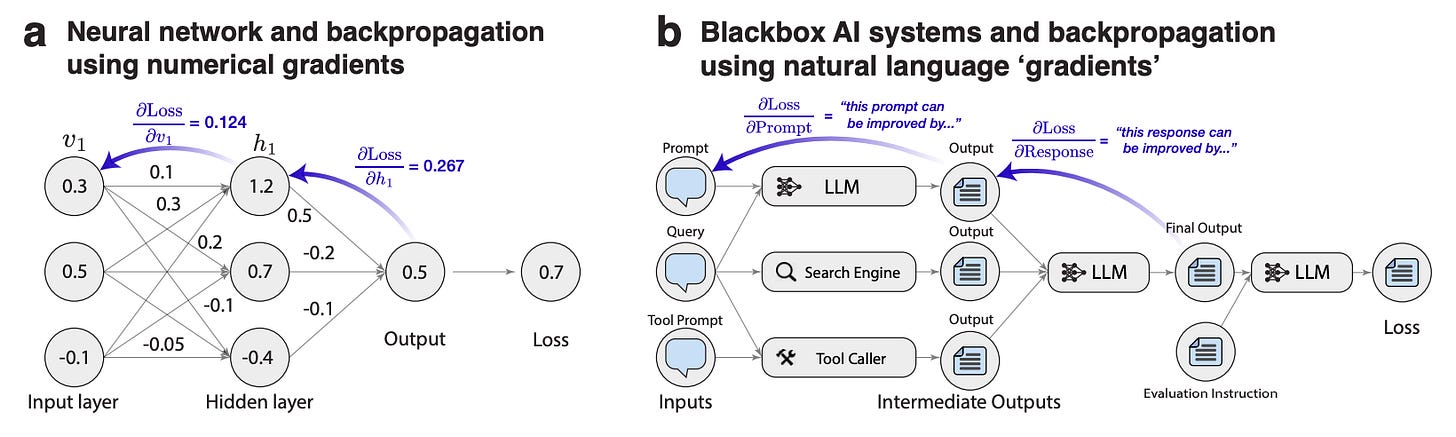

How can we achieve finer-grained optimization? This is exactly the problem that TextGrad aims to solve. TextGrad demonstrates how the differential (gradient-based) optimization used in deep learning can be carried over to prompt optimization. TextGrad (Paper: "TextGrad: Automatic 'Differentiation' via Text", details at https://arxiv.org/pdf/2406.07496) works in a way that reminds me of the familiar PyTorch workflow of computing a loss, propagating gradients backward, and taking an optimizer step.

By defining an objective function and evaluating the performance of each prompt, we can use an analogue of gradient descent to iteratively adjust the prompts: natural-language feedback from a language model plays the role of the gradient, and each step rewrites the prompt in the direction the feedback suggests, until the result stops improving. This method not only improves efficiency but also makes the optimization process more scientific and systematic.
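The shape of such a loop can be sketched without any library. This is not TextGrad's API: `critic` and `apply_feedback` are hypothetical stubs standing in for the two language model calls (one that criticizes the prompt, one that rewrites it), and `REQUIRED_HINTS` is an invented stand-in for whatever the objective rewards.

```python
REQUIRED_HINTS = [
    "Think step by step.",
    "State the final answer on its own line.",
]


def critic(prompt):
    """Hypothetical evaluator stub: return textual feedback, or None when satisfied."""
    for hint in REQUIRED_HINTS:
        if hint not in prompt:
            return f"The prompt should also say: {hint}"
    return None


def apply_feedback(prompt, feedback):
    """Hypothetical optimizer stub: edit the prompt as the feedback suggests."""
    suggestion = feedback.split(": ", 1)[1]
    return f"{prompt} {suggestion}"


def optimize(prompt, max_steps=5):
    """Alternate between criticism and revision until the critic is satisfied."""
    history = [prompt]
    for _ in range(max_steps):
        feedback = critic(prompt)  # "backward pass": the gradient is plain text
        if feedback is None:
            break
        prompt = apply_feedback(prompt, feedback)  # "optimizer step": rewrite
        history.append(prompt)
    return prompt, history


final_prompt, history = optimize("Answer the question.")
print(final_prompt)
```

Unlike the blind enumeration of a grid search, every revision here is informed by why the previous prompt fell short.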

However, it is important to recognize that the current differential optimization process in TextGrad is still only an imitation of true numeric optimization. The loss in this context is itself the output of a language model, which means it is not a number but a textual judgment of quality. Because of this difference, the optimization process lacks the controllability, quantifiability, and directionality of traditional numeric optimization methods: there is no step size to tune and no guarantee that a revision actually moves downhill.

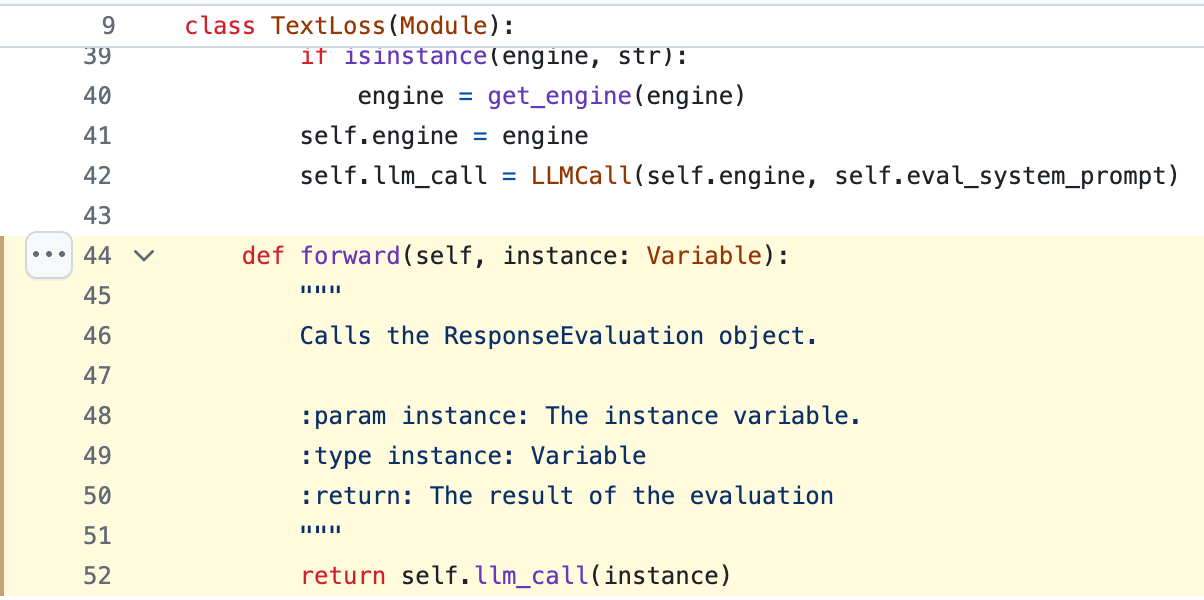

For example, with the loss function in TextGrad (see the code pointer: TextGrad Loss Function), the quality of the revised prompt may oscillate across iterations rather than improve steadily. The iterative process can even produce prompts that revert to previous states, which illustrates how hard it is to reach a stable, optimal solution and marks a significant area for improvement.
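The toy loop below illustrates the oscillation and one common safeguard, namely remembering the best prompt seen so far. It is an assumption of this sketch rather than something TextGrad is claimed to do, and the `REVISIONS` cycle and `SCORES` table are invented for the example.

```python
# Hypothetical revision behavior: after the first step the prompts cycle forever.
REVISIONS = {"terse": "detailed", "detailed": "verbose", "verbose": "detailed"}
# Hypothetical quality scores, as a numeric metric on a dev set might report them.
SCORES = {"terse": 0.5, "detailed": 0.8, "verbose": 0.6}


def run_with_best_tracking(initial, revise, score, steps):
    """Iterate revisions, keeping the best prompt and counting revisited states."""
    best_prompt, best_score = initial, score(initial)
    current, seen, revisits = initial, {initial}, 0
    for _ in range(steps):
        current = revise(current)
        if current in seen:
            revisits += 1  # the optimizer has reverted to an earlier prompt
        seen.add(current)
        current_score = score(current)
        if current_score > best_score:
            best_prompt, best_score = current, current_score
    return best_prompt, best_score, revisits


best_prompt, best_score, revisits = run_with_best_tracking(
    "terse", REVISIONS.get, SCORES.get, steps=5
)
print(best_prompt, best_score, revisits)
```

A large revisit count is a cheap signal that the loop is circling rather than converging.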

The journey of optimizing prompts through TextGrad is a testament to the exciting possibilities in this field. As we continue to refine these techniques, we can look forward to making the optimization process even more robust, quantifiable, and directional. The potential for achieving consistently improved and stable prompt quality is immense, and we are just beginning to unlock the full capabilities of differential optimization for prompts.

Taking Shape: More Systematic AI System Iterations

From heuristics to grid search to differential optimization, the future of AI prompt optimization is full of possibilities. By borrowing optimization strategies from neural networks, we can bring this field into a new era of intelligence, forming a complete loop system that achieves self-optimization and iteration.

We are witnessing AI prompt optimization transform from simple trial-and-error methods to more scientific and systematic processes. As technology continues to advance, future AI systems will better understand and adapt to user needs, providing more accurate and efficient solutions. This will not only improve the performance of AI systems but also significantly enhance user experience.

Moreover, the development of AI prompt optimization means that we can iterate and improve AI models more quickly. Through continuous optimization and adjustment, AI systems will become increasingly intelligent, capable of handling more complex and diverse tasks. We are moving towards an era where optimization is driven by scientific methods rather than random attempts.

In Conclusion

Although AI prompt optimization is still in its early stages and often resembles the random attempts of BogoSort, it shows promise with tools like DSPy and TextGrad. By forming feedback loops and introducing differential optimization, we are gradually moving away from the "monkeys typing" era towards a future of intelligent and efficient optimization.

The evolution of AI prompt optimization represents not just a technological breakthrough but a shift in mindset. We are moving from random attempts to systematic, scientific optimization methods, much like progressing from a primitive era to a civilized one. This journey will continue to drive the development of AI technology, making our lives more convenient and intelligent.

It feels like raw prompting is the assembly language of the LLM era, and higher-level abstractions are emerging as the way to interface with the LLM for optimized results. Surprisingly, I have not seen many startups in this space.